Introduction

Artificial intelligence (AI) has revolutionized the way we interact with technology. From virtual assistants to chatbots, AI-powered tools have become an integral part of our daily lives. In this tutorial, we will show you how to build a simple voice-controlled chatbot using Gradio and OpenAI's APIs. OpenAI's Whisper model turns your speech into text, and the GPT-3.5 model "gpt-3.5-turbo" writes the reply, which is then read aloud.

Gradio is a Python library for building web-based user interfaces for machine learning models and other Python functions. With a few lines of code, it turns a function into a web app that can run locally or in the cloud. The chatbot in this tutorial uses "gpt-3.5-turbo", a GPT-3.5 model that OpenAI optimized for chat. It generates human-like responses to text input, which makes it well suited to chatbot applications.

Let's get started!

Step 1: Install Required Libraries

First, create a file named "app.py" in your project directory, then install the required libraries:

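gradio: a library for building web UIs for machine learning models and Python functions

openai: the Python client for the OpenAI API, used here for both Whisper transcription and GPT-3.5 chat completions

pyttsx3: an offline text-to-speech library that uses your operating system's speech engine

python-dotenv: a library for loading environment variables from a .env file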

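You can install them with pip. The code in this tutorial uses the pre-1.0 interface of the openai package and the Gradio 3 audio component, so pin both packages:

```shell
pip install "gradio<4" "openai<1" pyttsx3 python-dotenv
```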
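The app.py script in Step 3 calls "openai.Audio.transcribe" and "openai.ChatCompletion.create", which were removed in version 1.0 of the openai package. It also passes "source=" to "gr.Audio", which Gradio 4 replaced with "sources=". If you are unsure what is installed, a small stdlib-only sketch like the one below can report the major versions. The helper names "major_version" and "check_versions" are hypothetical. They are not part of either library.

```python
from importlib.metadata import PackageNotFoundError, version


def major_version(version_string: str) -> int:
    """Return the leading integer of a version string such as '0.28.1'."""
    return int(version_string.split(".")[0])


def check_versions() -> list[str]:
    """Return warnings for installed packages that are too new for this tutorial's code."""
    # Exclusive upper bound on the major version that still has the APIs app.py uses.
    limits = {"openai": 1, "gradio": 4}
    warnings = []
    for package, limit in limits.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            warnings.append(f"{package} is not installed")
            continue
        if major_version(installed) >= limit:
            warnings.append(f"{package} {installed} is too new; install {package}<{limit}")
    return warnings


if __name__ == "__main__":
    for warning in check_versions():
        print(warning)
```

An empty output means both packages are present and old enough for the code below.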

Step 2: Set Up OpenAI API Key

Next, set up your OpenAI API key. You can get one by creating an account on OpenAI's website. Watch this video if you don't know how to get your API key.

Once you have your API key, create a file called ".env" in your project directory and add the following line:

```
OPENAI_API_KEY=<your-api-key>
```

When app.py calls "load_dotenv()" from the python-dotenv package, it reads this file and adds OPENAI_API_KEY to the process environment, where your code can read it with "os.getenv()".
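Here is a deliberately simplified, stdlib-only sketch of what loading a .env file amounts to. It reads KEY=VALUE lines and places them in "os.environ" without overwriting variables that are already set, which is also the default behavior of "load_dotenv()". The function name "load_env_file" is hypothetical. python-dotenv also handles "export" prefixes, inline comments and variable expansion, and this sketch ignores all of them. In app.py, keep using "load_dotenv()".

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Simplified stand-in for python-dotenv: parse KEY=VALUE lines into os.environ.

    Variables that already exist in the environment are left untouched.
    Returns every key/value pair that was parsed from the file.
    """
    parsed = {}
    for raw_line in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, full-line comments, and lines without an '=' sign.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Trim whitespace and one layer of surrounding quotes.
        key, value = key.strip(), value.strip().strip('"').strip("'")
        parsed[key] = value
        # Like load_dotenv()'s default, never override an existing variable.
        os.environ.setdefault(key, value)
    return parsed
```

After "load_env_file()" runs in the project directory, "os.getenv("OPENAI_API_KEY")" returns the key from the file, unless the variable was already set in your shell.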

Step 3: Define Chatbot Function

Now we will write the function that powers our chatbot, along with the rest of the app. Here is the complete "app.py":

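```python
import os

import gradio as gr  # gr.Audio(source=...) requires gradio<4
import openai  # openai.Audio and openai.ChatCompletion require openai<1
import pyttsx3
from dotenv import load_dotenv

# Read OPENAI_API_KEY from the .env file into the environment.
load_dotenv()
openai.api_key = os.getenv("OPENAI_API_KEY")

# Conversation history; the system message sets the assistant's persona.
messages = [
    {"role": "system", "content": "You are a teacher"},
]


def transcribe(audio):
    # Gradio passes None if the user submits without recording anything.
    if audio is None:
        return "No audio recorded. Record a question, then press Submit."

    # Speech to text with OpenAI's Whisper model; "with" closes the file afterwards.
    with open(audio, "rb") as file:
        transcription = openai.Audio.transcribe("whisper-1", file)
    print(transcription)
    messages.append({"role": "user", "content": transcription["text"]})

    # Send the whole conversation so the model can take earlier turns into account.
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=messages,
    )
    AImessage = response["choices"][0]["message"]["content"]

    # Read the reply aloud with the local text-to-speech engine.
    engine = pyttsx3.init()
    engine.say(AImessage)
    engine.runAndWait()

    messages.append({"role": "assistant", "content": AImessage})

    # Render the conversation, minus the system prompt, for the output text box.
    chat = ""
    for message in messages:
        if message["role"] != "system":
            chat += message["role"] + ":" + message["content"] + "\n\n"
    return chat


ui = gr.Interface(
    fn=transcribe,
    inputs=gr.Audio(source="microphone", type="filepath"),
    outputs="text",
)
ui.launch()
```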

This code takes audio recorded from the microphone and transcribes it with OpenAI's Whisper speech-to-text model. It then sends the conversation to "gpt-3.5-turbo" and returns the updated conversation as text. It also reads each reply aloud with pyttsx3 on the computer running the script.
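To make the request flow easier to follow and to test without an API key, the logic of "transcribe" can be separated from its network calls. The sketch below is a hypothetical refactoring, not the tutorial's code. "speech_to_text", "chat_reply" and "speak" are placeholder callables. In the real app they would wrap "openai.Audio.transcribe", "openai.ChatCompletion.create" and pyttsx3; here they are replaced by stubs.

```python
from typing import Callable

Message = dict[str, str]


def handle_turn(
    audio_path: str,
    history: list[Message],
    speech_to_text: Callable[[str], str],
    chat_reply: Callable[[list[Message]], str],
    speak: Callable[[str], None],
) -> str:
    """Run one voice turn: transcribe, ask the model, speak, and render the transcript."""
    # 1. Audio file to user text (Whisper in the real app).
    history.append({"role": "user", "content": speech_to_text(audio_path)})
    # 2. Whole history to assistant text (gpt-3.5-turbo in the real app).
    reply = chat_reply(history)
    # 3. Speak the reply (pyttsx3 in the real app), then remember it.
    speak(reply)
    history.append({"role": "assistant", "content": reply})
    # 4. Render everything except the system prompt, as app.py does.
    return "".join(
        f"{m['role']}:{m['content']}\n\n" for m in history if m["role"] != "system"
    )


if __name__ == "__main__":
    history = [{"role": "system", "content": "You are a teacher"}]
    transcript = handle_turn(
        "question.wav",  # hypothetical path; the stub below never opens it
        history,
        speech_to_text=lambda path: "What is 2 + 2?",
        chat_reply=lambda msgs: "2 + 2 is 4.",
        speak=lambda text: None,
    )
    print(transcript)
```

Injecting the three services keeps the ordering of the steps in one place. It also shows that the history list grows by two messages per turn.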

Let's go over the key components of this code:

The "messages" list stores the conversation history between the user and the chatbot. It starts with the system message "You are a teacher", which instructs the model to answer in the role of a teacher.

The "transcribe" function receives the path of the recorded audio file and opens it in binary mode with "open()". It then transcribes the audio using OpenAI's "Audio.transcribe()" method with the "whisper-1" model and appends the recognized text to the "messages" list as a user message.

The "openai.ChatCompletion.create()" method sends the entire conversation history to the "gpt-3.5-turbo" model. The model then generates a reply that takes the previous messages into account.

The "AImessage" variable stores the generated reply text. We then use the "pyttsx3" library to convert the text to speech and play it through the speakers of the machine running the script. Finally, we add the reply to the "messages" list and format the conversation, excluding the system message, as a single string that Gradio displays to the user.
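The "messages" list is passed to the chat completions endpoint essentially as-is. The sketch below uses only the standard library to show the JSON body for the "model" and "messages" fields, and the path that app.py follows through the response to reach the reply. The helper names are hypothetical, and the response dictionary is a made-up minimal example containing only the fields app.py reads, not real API output.

```python
import json


def build_chat_request(history: list[dict[str, str]], model: str = "gpt-3.5-turbo") -> str:
    """Serialize the conversation into a JSON body with the model name and message list."""
    return json.dumps({"model": model, "messages": history})


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of a chat completion response, as app.py does."""
    return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a teacher"},
        {"role": "user", "content": "What is photosynthesis?"},
    ]
    print(build_chat_request(history))
    # Made-up response trimmed to the fields that app.py actually reads.
    sample = {"choices": [{"message": {"role": "assistant", "content": "It is how plants make food."}}]}
    print(extract_reply(sample))
```

Because the full list is sent on every turn, the model "remembers" earlier questions only because app.py keeps appending to "messages".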

Step 4: Create Gradio Interface

Now that we have our chatbot function defined, we can create a Gradio interface to capture audio input from the user and display the chat history. These are the last lines of "app.py" above:

```python
ui = gr.Interface(fn=transcribe, inputs=gr.Audio(source="microphone", type="filepath"), outputs="text")
ui.launch()
```

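This code creates a Gradio interface object and sets the "fn" parameter to our "transcribe" function. The input is a "gr.Audio" component that records from the microphone. With type='filepath', Gradio saves each recording to a temporary file and passes its path to "transcribe", which is why the function can open it. The output is a text box that shows the string "transcribe" returns. Finally, the "launch()" method starts the local web server.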
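If you installed Gradio 4 or later, "gr.Audio" no longer accepts "source=". It takes a list instead, for example sources=["microphone"]. The following is a minimal sketch, assuming you want one script that works with either major version. "audio_kwargs" is a hypothetical helper, and the rest of app.py still needs openai<1.

```python
def audio_kwargs(gradio_version: str) -> dict:
    """Keyword arguments for a microphone gr.Audio that passes a file path to fn.

    Gradio 3.x takes source="microphone"; Gradio 4.x replaced it with sources=[...].
    """
    major = int(gradio_version.split(".")[0])
    if major >= 4:
        return {"sources": ["microphone"], "type": "filepath"}
    return {"source": "microphone", "type": "filepath"}
```

In app.py you would then write: inputs=gr.Audio(**audio_kwargs(gr.__version__)).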

Step 5: Run the Chatbot

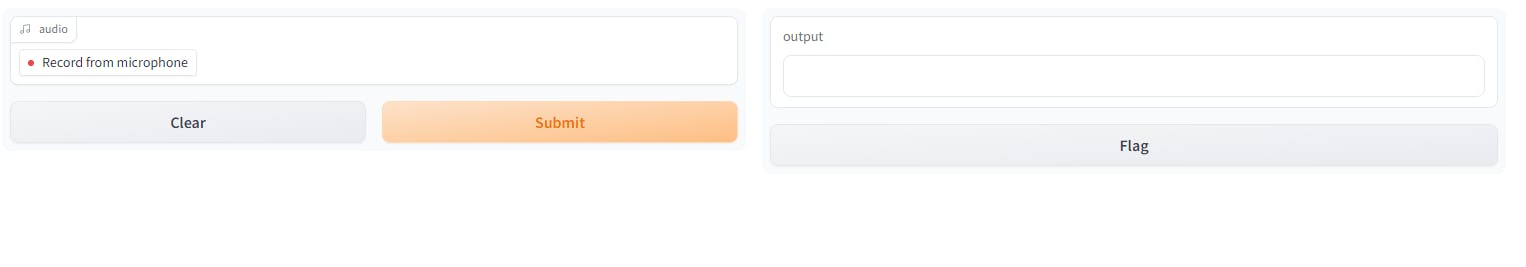

To run the chatbot, simply run the Python script gradio app.py or python app.py incase gradio app.py doesn't work and it will run on http://127.0.0.1:7860. Gradio will launch a web-based interface that allows users to speak into their microphone and receive AI-generated responses in real-time and it will then look like this:
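If the page does not load, a quick stdlib check from a second terminal can tell you whether anything is listening on the port. 7860 is Gradio's default port. If it is busy, Gradio picks another free port and prints the URL it actually used in the terminal. "is_listening" is a hypothetical helper.

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 7860, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Gradio app is up" if is_listening() else "Nothing is listening on 127.0.0.1:7860")
```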

Click the "Record from microphone" button and speak your question. When you are done, click "Stop recording"; if you submit while still recording, the app returns an error. Then press the "Submit" button to send your recording and watch the reply appear as text while it is read aloud. To ask another question, click the "Clear" button and record again.

Conclusion

In this tutorial, we've learned how to create a simple voice-based AI chatbot using Gradio, OpenAI's Whisper model, and OpenAI's GPT-3.5 model. With just a few lines of code, you can build a chatbot that listens to spoken questions and answers with human-like responses, both as text and out loud. We hope you found this tutorial helpful, and we encourage you to explore the many other applications of AI and machine learning in your own projects. Here is the link to the project: